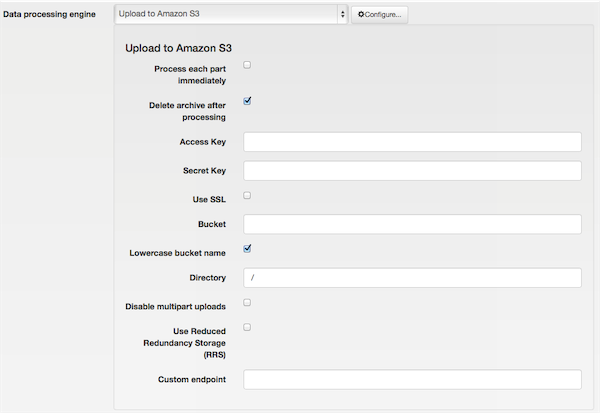

If you've followed the instructions so far, it's time to set up Amazon S3. On the Configuration page, right below the Archiver Engine setting, there is another setting called Data processing engine. Use the drop-down to select the Upload to Amazon S3 value and then click the button next to it. You should now see something like this:

Setting up the Amazon S3 engine

In this configuration details pane you have to enter your Amazon S3 Access key and Private key.

You create these yourself in your Amazon S3 console. If you have never done this before, it's easy: click on your name towards the top of the page and then click on Security Credentials. Follow the instructions on that page to create a new Access and Private key pair.

Back on our configuration page, checking the Use SSL setting makes your data transfer over a secure, encrypted connection, at the price of taking a little longer to process. We recommend turning it on anyway. The Bucket setting defines the Amazon S3 bucket you are going to use to store your backups. You also need to set the Amazon S3 Region to the region in which your bucket was created. If you are not sure, go to the Amazon S3 management console, right click on your bucket and click on Properties. The properties pane opens to the right and shows the Region of your bucket. Back in our software, the Directory setting defines the directory inside the bucket where you want the backup files stored. This directory must already exist.

| ![[Warning]](/media/com_docimport/admonition/warning.png) | Warning |
|---|---|
| | DO NOT CREATE BUCKETS WITH NAMES CONTAINING UPPERCASE LETTERS. AMAZON CLEARLY WARNS AGAINST DOING THAT. If you use a bucket with uppercase letters in its name, it is quite possible that Akeeba Solo / Akeeba Backup will not be able to upload anything to it. More specifically, it seems that if your web server is located in Europe, you will be unable to use a bucket with uppercase letters in its name. If your server is in the US, you will most likely be able to use such a bucket. Your mileage may vary. Please note that this is a limitation imposed by Amazon itself. It is not something we can "fix" in Akeeba Solo / Akeeba Backup. Moreover, you cannot use a bucket whose name contains a dot together with the Use SSL option. This is a limitation of the SSL setup in Amazon S3 servers and cannot be worked around, especially for EU-hosted buckets. |
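
The two naming rules in the warning above can be checked before you type a bucket name into the configuration page. The following is only a minimal sketch in Python: `bucket_name_problems` is a hypothetical helper, not part of Akeeba Solo / Akeeba Backup, and it covers just the two rules stated in this section rather than Amazon's full bucket naming requirements.

```python
from typing import List


def bucket_name_problems(bucket: str, use_ssl: bool) -> List[str]:
    """Hypothetical helper: list the problems this section warns about."""
    problems = []
    # Rule 1: uppercase letters in the bucket name may prevent uploads.
    if any(ch.isupper() for ch in bucket):
        problems.append("bucket name contains uppercase letters")
    # Rule 2: a dot in the bucket name cannot be combined with Use SSL.
    if use_ssl and "." in bucket:
        problems.append("bucket name contains a dot but Use SSL is enabled")
    return problems


print(bucket_name_problems("MySiteBackups", use_ssl=True))
print(bucket_name_problems("my.site.backups", use_ssl=True))
print(bucket_name_problems("my-site-backups", use_ssl=True))  # prints []
```

An empty list means the name passes both checks. It does not guarantee that the bucket exists or that your keys can access it.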

Note that, as per S3 standards, the path separator for the directory is the forward slash. For example, writing first_level\second_level is wrong, whereas first_level/second_level is the correct form.

We recommend using one bucket for nothing but site backups, with one directory per site or subdomain you intend to back up. If you want to use a first-level directory, just type in its name without a leading or trailing forward slash.
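
As an illustration of the directory rules described here, this short Python sketch turns a loosely typed path into the form the Directory setting expects. `normalize_directory` is a hypothetical name used for illustration only; the software itself expects you to enter the value in the correct form.

```python
def normalize_directory(directory: str) -> str:
    """Hypothetical helper: forward slashes only, no leading or trailing slash."""
    # Backslashes are not path separators on S3, so convert them.
    cleaned = directory.replace("\\", "/")
    # Splitting and dropping empty segments removes leading, trailing
    # and accidentally doubled slashes in one step.
    segments = [segment for segment in cleaned.split("/") if segment]
    return "/".join(segments)


print(normalize_directory("first_level\\second_level"))  # first_level/second_level
print(normalize_directory("/backups/"))                  # backups
```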

| ![[Tip]](/media/com_docimport/admonition/tip.png) | Tip |
|---|---|
| | Should you need a visual interface for creating and managing Amazon S3 buckets, you can use your Amazon S3 console. |

Save the changed settings. Back on the Akeeba Solo / Akeeba Backup Professional main page, click on the icon that starts a new backup.

Ignore any warning about the Default output directory being in use. We don't need to care about it; our backup archives will end up securely stored on Amazon S3 anyway. Just click on the big button and sit back. The upload to Amazon S3 takes place in the final step of the process, titled Finalizing the backup process. If you get an error at this stage, go back to the configuration and lower the Part size for archive splitting setting.

| ![[Important]](/media/com_docimport/admonition/important.png) | Important |
|---|---|
| | On local testing servers you will have to use very small part sizes, in the area of 1-5 MB, because consumer xDSL Internet service has a much lower upload rate than most hosts. |
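
To see why the part size matters so much on a slow line, it helps to estimate how long a single part takes to upload. The sketch below is a rough back-of-the-envelope calculation in Python. The upload rates are made-up example figures, 1 MB is treated as 8 megabits, and protocol overhead is ignored, so treat the results as optimistic lower bounds.

```python
def upload_seconds(part_size_mb: float, upload_mbit_per_s: float) -> float:
    """Rough time to upload one archive part, ignoring protocol overhead."""
    # 1 MB is treated as 8 megabits for this estimate.
    return part_size_mb * 8 / upload_mbit_per_s


# Assumed example rates: 1 Mbit/s for a consumer xDSL line, 50 Mbit/s for a host.
print(f"{upload_seconds(5, 1):.0f} s")    # 40 s
print(f"{upload_seconds(20, 1):.0f} s")   # 160 s
print(f"{upload_seconds(20, 50):.1f} s")  # 3.2 s
```

The longer a single part takes to upload, the more likely that step is to fail, which is why lowering the part size is the first thing to try.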

| ![[Warning]](/media/com_docimport/admonition/warning.png) | Warning |
|---|---|
| | If you get a RequestTimeout warning while Akeeba Solo / Akeeba Backup is trying to upload your backup archive to the cloud, you MUST go to the configuration of the S3 engine and enable its "Disable multipart uploads" option. If you don't do that, the upload will not work. You will also have to use a relatively small part size for archive splitting, around 10-20 MB (this depends on the host; your mileage may vary). |